How to Deploy BLOOM 560M to Production

February 22, 2023

Deprecated: This blog article is deprecated. We strive to rapidly improve our product and some of the information contained in this post may no longer be accurate or applicable. For the most current instructions on deploying a model like BLOOM to Banana, please check our updated documentation.

In this tutorial, we'll show you how to run BLOOM 560M in production on serverless GPUs. This is the BLOOM 560M model source code we are using in the tutorial.

Note: we are using the BLOOM 560M model because the large BLOOM model is too big to run on Banana's serverless platform at this time.

Let's begin!

What is BLOOM?

BLOOM is a popular open source text generation model. BLOOM stands for BigScience Large Open-science Open-access Multilingual Language Model. This model was developed by BigScience, a group of over 1,000 scientific contributors from across the world.

One of the cool things about BLOOM is that it can generate text in 46 languages and 13 programming languages.

Video: BLOOM Deployment Tutorial

Video Notes & Resources:

We mentioned a few resources and links in the tutorial. Here they are:

- Creating a Banana account (click Sign Up).

- BLOOM Banana template.

In the tutorial we used a virtual environment on our machine to run our demo model. If you want to create your own virtual environment, use these commands (Mac):

- create virtual env: python3 -m venv venv

- start virtual env: source venv/bin/activate

- packages to install: pip install banana_dev

Guide: How to Deploy BLOOM 560M on Serverless GPUs

1. Fork Banana's Serverless Framework Repo

Fork Banana's serverless framework into a private repo as a starting point. We'll use it as the base repo to run BLOOM. You can technically use this framework to deploy any custom model to Banana.

2. Customize Repository to run BLOOM

Next, we need to customize the repository to run BLOOM 560M instead of the default BERT model. To do this, you'll need to read our Banana docs to understand how each file operates within the Serverless Framework.

In essence, you'll need to:

- Make sure the download.py file downloads BLOOM 560M

- Load BLOOM 560M within the init()

- Update the inference() block to run BLOOM 560M
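The three changes above can be sketched roughly as follows. This is a minimal sketch, not the exact code from the tutorial repo: it assumes the template exposes `init()` and `inference(model_inputs)` in one file and a download step in another, that the model is loaded through Hugging Face `transformers` under the Hub ID `bigscience/bloom-560m`, and that requests carry a `prompt` key. The `parse_inputs` helper and the `max_new_tokens` input are hypothetical names added for illustration.

```python
# Sketch of the customized template files (layout and input keys are assumptions).
MODEL_ID = "bigscience/bloom-560m"  # Hugging Face Hub ID for BLOOM 560M

model = None
tokenizer = None


def download_model():
    """Download step: fetch the weights at build time so they are cached."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    AutoTokenizer.from_pretrained(MODEL_ID)
    AutoModelForCausalLM.from_pretrained(MODEL_ID)


def init():
    """Load BLOOM 560M once, when the server starts."""
    global model, tokenizer
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    device = "cuda" if torch.cuda.is_available() else "cpu"
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID).to(device)
    model.eval()


def parse_inputs(model_inputs):
    """Hypothetical helper: validate the request and return (prompt, max_new_tokens)."""
    prompt = model_inputs.get("prompt")
    if not isinstance(prompt, str) or not prompt:
        raise ValueError("No prompt provided")
    max_new_tokens = int(model_inputs.get("max_new_tokens", 50))
    return prompt, max_new_tokens


def inference(model_inputs: dict) -> dict:
    """Run BLOOM 560M on one request and return the generated text."""
    try:
        prompt, max_new_tokens = parse_inputs(model_inputs)
    except ValueError as err:
        return {"message": str(err)}

    import torch

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    text = tokenizer.decode(output_ids[0], skip_special_tokens=True)
    return {"output": text}
```

Loading the model in `init()` rather than in `inference()` means the weights are read once per server start and not once per request.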

3. Create Banana Account and Deploy BLOOM

After you have adjusted the code in your repo, you'll need to test it. We highly recommend testing your code before deploying to Banana. The easiest way to test your code is to use Brev (follow this tutorial).
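Once the server from your repo is running on a GPU machine, a quick smoke test could look like the sketch below. It assumes the server accepts a JSON POST at `http://localhost:8000/` and that the request body uses a `prompt` key. Both are assumptions about your setup, so adjust them to match your repo. The helper names are hypothetical.

```python
import json
import urllib.request

LOCAL_URL = "http://localhost:8000/"  # assumed address of the locally running server


def build_request(prompt, url=LOCAL_URL):
    """Build a JSON POST request carrying the prompt (the "prompt" key is an assumption)."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=120):
    """Send the request and decode the JSON response from the server."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server running, `send(build_request("Hello, my name is"))` should return whatever dictionary your `inference()` function produces.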

From here, log in to your Banana Dashboard and click the "New Model" button.

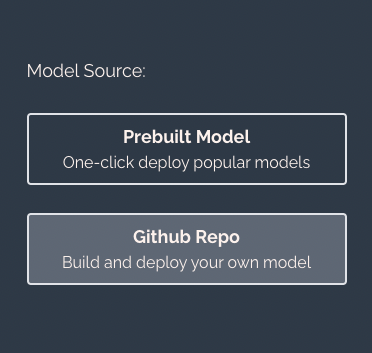

A popup will appear that looks like this:

Select "GitHub Repo", and choose the repository that you made for BLOOM. Click "Deploy" and the model will start to build. The build process can take up to 1 hour, so please be patient.

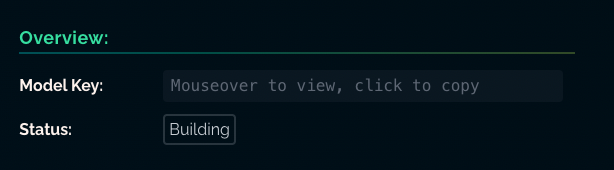

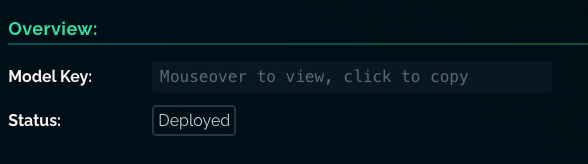

You'll see the Model Status change from "Building" to "Deployed" when it's ready to be called.

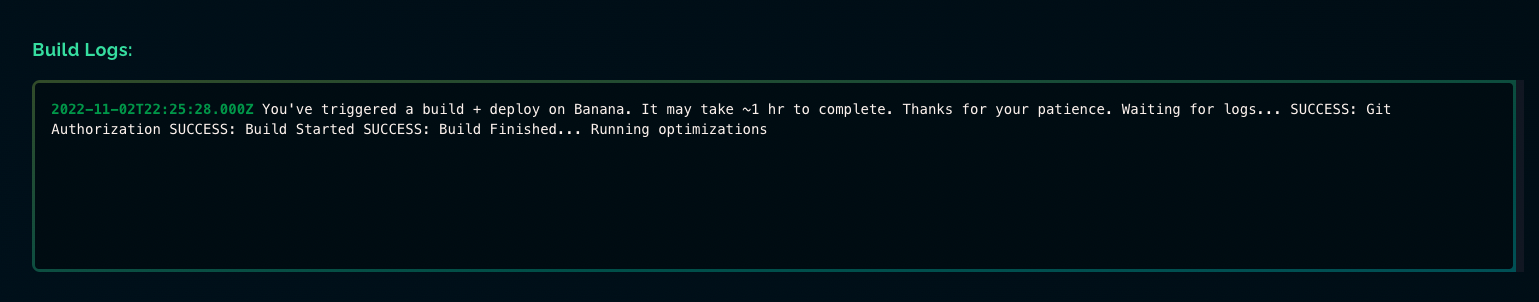

You can also monitor the status of your build in the Model Logs tab.

4. Call your BLOOM Model

Once your model is built, it's time to run it in production! To call your model, choose which Banana SDK you would like to use (Python, Node, or Go).
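With the Python SDK, a call might look like the sketch below. It assumes the `banana_dev` package version from around the time of this post, which exposed `banana.run(api_key, model_key, model_inputs)` and returned a dictionary with a `modelOutputs` list. Newer SDK versions may differ, so check the current docs. The environment variable names, the `prompt` key, and the helper functions are hypothetical.

```python
import os


def build_model_inputs(prompt, max_new_tokens=50):
    """Request payload; the keys must match what your inference() function reads."""
    return {"prompt": prompt, "max_new_tokens": max_new_tokens}


def first_output(response):
    """Pull the first model output from the SDK response (assumed response shape)."""
    outputs = response.get("modelOutputs") or []
    if not outputs:
        raise RuntimeError("No model outputs in response: %r" % (response,))
    return outputs[0]


def call_bloom(prompt):
    """Call the deployed model through the Banana Python SDK."""
    import banana_dev as banana  # installed with: pip install banana_dev

    api_key = os.environ["BANANA_API_KEY"]      # hypothetical variable name
    model_key = os.environ["BANANA_MODEL_KEY"]  # hypothetical variable name
    response = banana.run(api_key, model_key, build_model_inputs(prompt))
    return first_output(response)
```

Reading the keys from environment variables keeps them out of the repository. Calling `call_bloom("Hello, my name is")` then returns the dictionary produced by your `inference()` function.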

Congrats on calling your model in production! You are now running BLOOM 560M on serverless GPUs.

Wrap Up

Let us know if you have questions or want to chat about BLOOM. The best place to do that would be on our Discord or by tweeting us on Twitter. What other machine learning models would you like to see a deployment tutorial for? Let us know!