How to Deploy a BERT Model to Production on Serverless GPUs

November 02, 2022

Deprecated: This blog article is deprecated. We strive to rapidly improve our product and some of the information contained in this post may no longer be accurate or applicable. For the most current instructions on deploying a model like BERT to Banana, please check our updated documentation.

In this tutorial we'll demonstrate how you can deploy the BERT machine learning model to production on serverless GPUs. For reference, we are using the BERT-base-uncased model from HuggingFace.

This tutorial can be completed in less than 20 minutes (minus build time). Let's dive in!

How to Deploy BERT on Serverless GPUs

1. Fork Banana's Serverless Framework Repo

Take this repository and fork it to a private repo so you can make it your own. This repository is the base serverless framework that enables you to deploy any custom model to Banana.

Lucky for you, the demo model in this repository is BERT, so there is nothing to change unless you plan to customize it.

Note: If you were deploying a custom model to Banana other than BERT, you would still start with this same repository but you would need to swap out the BERT model and customize code within the repo to make it function for your model of choice. You can learn more about the Serverless Framework and how each file operates here.

2. Create Banana Account and Deploy BERT

Once the repo is forked, simply log in to your Banana Dashboard and click the "New Model" button.

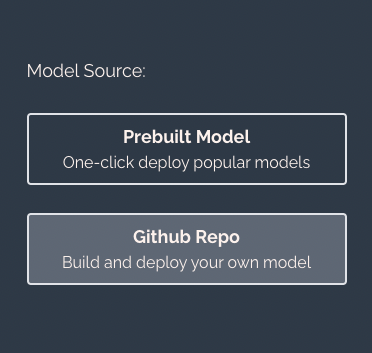

A popup like this will appear:

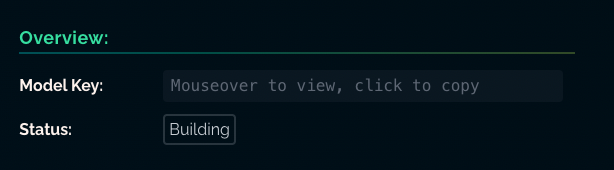

Select "GitHub Repo", and choose the GitHub repository that you just made for BERT. Click "Deploy" and the model will start to build. The build can take up to 1 hour, so be patient, though it is usually much faster than that.

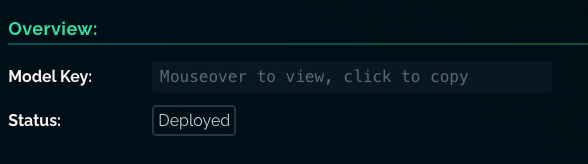

You'll see the Model Status change from "Building" to "Deployed" when it's ready to be called.

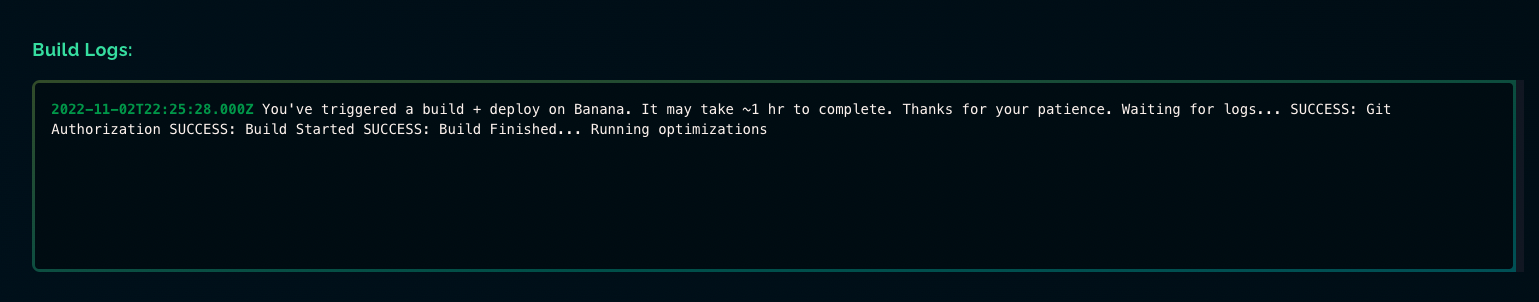

You can also monitor the status of your build in the Model Logs tab.

3. Call your BERT Model

Once your model is built, you're ready to use it in production! To call your model, first decide which Banana SDK you would like to use (Python, Node, or Go).

We'll use Python in this tutorial.

Simply head to your code editor (we're using VS Code), and call your model using the Banana SDK!

For this tutorial, our code to call BERT looked like this:

    import banana_dev as banana

    api_key = "YOUR_API_KEY"
    model_key = "YOUR_MODEL_KEY"

    model_inputs = {'prompt': 'Hello I am a [MASK] model.'}
    out = banana.run(api_key, model_key, model_inputs)
    print(out)
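For production use you will probably not want keys hard-coded in a script. The sketch below is one possible way to wrap the same call: it reads the keys from environment variables and checks that the prompt contains BERT's `[MASK]` token before sending it. The variable names `BANANA_API_KEY` and `BANANA_MODEL_KEY` and the helpers `build_model_inputs` and `call_bert` are hypothetical names chosen for this example; only `banana.run(api_key, model_key, model_inputs)` comes from the tutorial above.

```python
import os

MASK_TOKEN = "[MASK]"  # mask token used by bert-base-uncased


def build_model_inputs(prompt):
    """Build the request payload, rejecting prompts with nothing to fill in."""
    if MASK_TOKEN not in prompt:
        raise ValueError(f"prompt must contain {MASK_TOKEN}")
    return {"prompt": prompt}


def call_bert(prompt, run=None):
    """Call the deployed model; pass a stub as `run` to test without the SDK."""
    if run is None:
        # Imported lazily so this helper can be exercised offline.
        import banana_dev as banana
        run = banana.run
    api_key = os.environ["BANANA_API_KEY"]      # hypothetical variable name
    model_key = os.environ["BANANA_MODEL_KEY"]  # hypothetical variable name
    return run(api_key, model_key, build_model_inputs(prompt))
```

With both environment variables set, `print(call_bert("Hello I am a [MASK] model."))` makes the same request as the script above.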

Wrap Up

We'd love to chat if you have any questions or want to talk shop about BERT. The best place to do that would be on our Discord or by tweeting us on Twitter. What other machine learning models would you like to see a deployment tutorial for? Let us know!